On this page, you can find (extended) benchmark results accompanying paper submissions.

QComp 2020 [1]

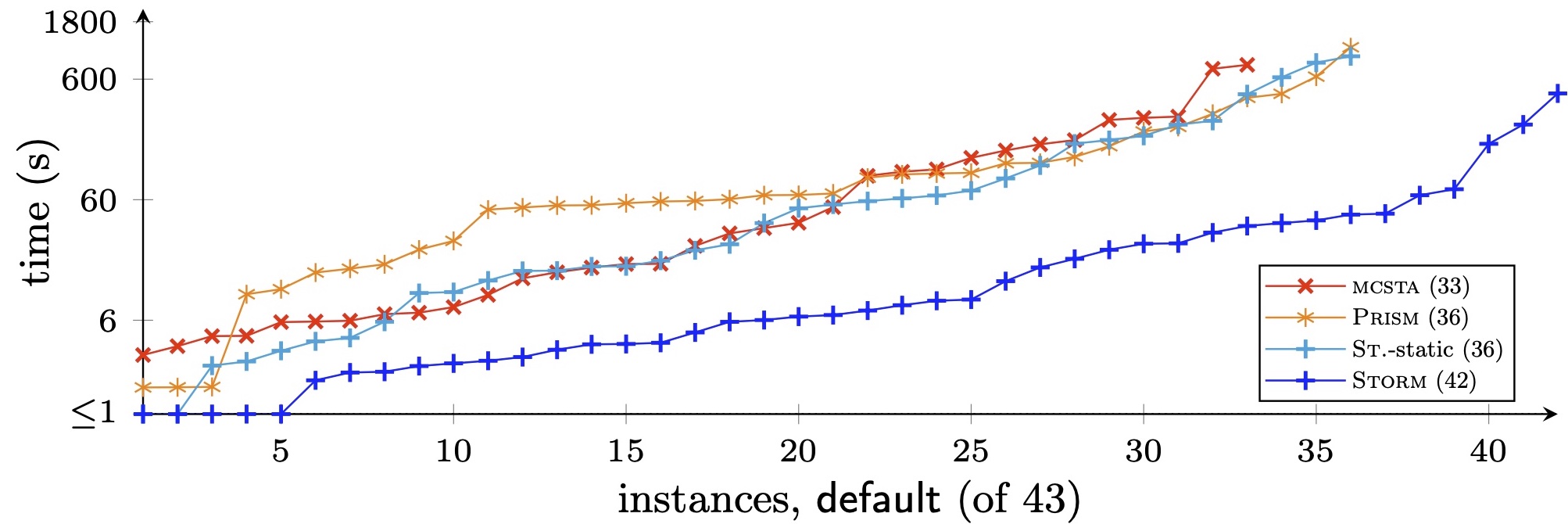

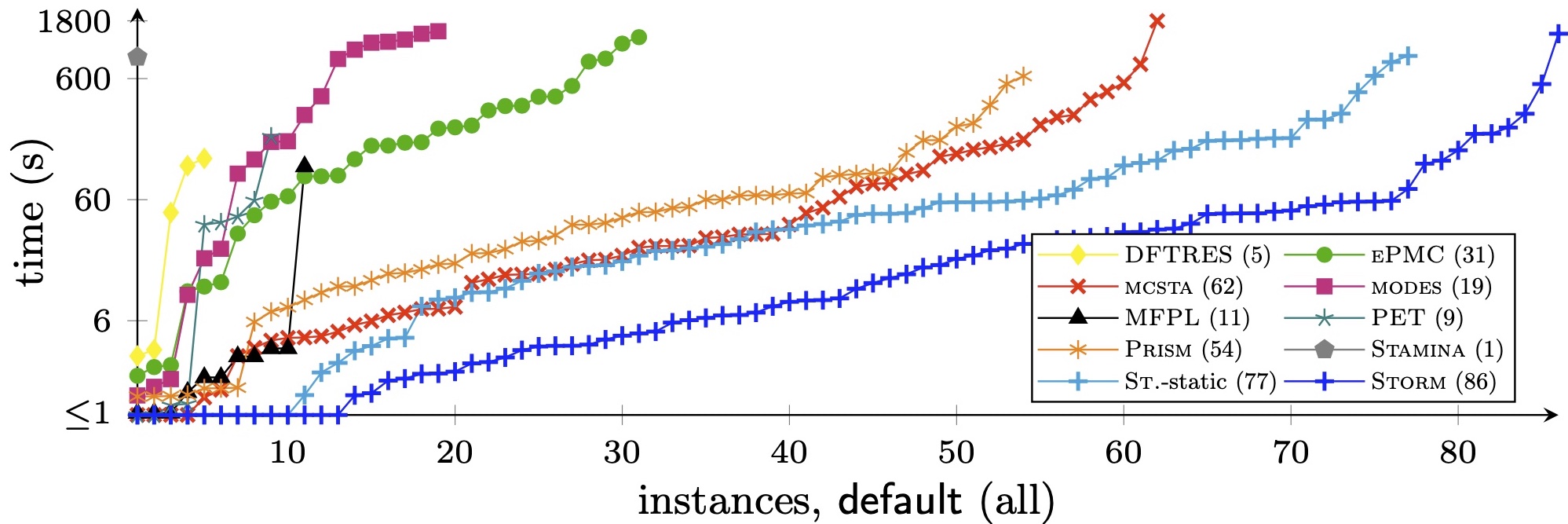

Storm participated in the 2020 Comparison of Tools for the Analysis of Quantitative Formal Models (QComp 2020). Storm participated in two modes: with the new automatic engine selection and with static engine selection.

Result summary for the ε-correct track:

Result summary for the often ε-correct track:

Further results for all tracks can be found in the competition report and the detailed result tables on the QComp 2020 website.

The Probabilistic Model Checker Storm [2]

Setup

The benchmarks were run on 4 cores of an Intel Xeon Platinum 8160 Processor with 12GB of memory available. The timeout was 1800 seconds.

Benchmarks

All benchmarks from QComp 2019 were considered, except for 4 PTA Benchmarks that were not compatible with Storm.

Results

Show interactive result table Download raw data and replication package

QComp 2019 [3]

Storm participated in the 2019 Comparison of Tools for the Analysis of Quantitative Formal Models (QComp 2019). Details on the competition, the participating tools and the QComp benchmark set can be found on the competition website. Detailed results are available in the interactive results table.

A Storm is Coming: A Modern Probabilistic Model Checker [4]

Setup

The benchmarks were conducted on a HP BL685C G7. All tools had up to eight cores with 2.0GHz and 8GB of memory available, but only the Java garbage collection of PRISM and EPMC used more than one core. The timeout was set to 1800 seconds.

Benchmarks

In this paper, we use all non-PTA models from the PRISM benchmark suite and the IMCA benchmarks for MAs.

Results

In order to share the results, we provide them as a set of log files. To make the results more accessible, we also give four tables (one for each model type: DTMC, CTMC, MDP and MA). Using the buttons near the top of the table, you can select which of the configurations of the tools are displayed side-by-side (by default all configurations of participating tools are shown). For example, clicking Storm-sparse toggles whether Storm’s sparse engine participates in the comparison or not. As argued in the paper, it makes sense to compare “matching engines” of the tools. (For an explanation which engines are comparable, please consult the paper.) This is why there are also buttons that let you select the tool configurations that are comparable with one click (Show sparse, Show hybrid, Show dd and Show exact). The best time for each instance is marked green. By clicking on the time for a particular experiment, you are taken to the log file of that experiment.

The log files were obtained using a version of Storm that represents “infinity” as “-1” in --exact mode. This is,

however just a displaying issue. Newer versions of Storm correctly display “inf” as the result.

Show DTMC table Show CTMC table Show MDP table Show MA table Show log files

References

- Carlos E. Budde et al., “On Correctness, Precision, and Performance in Quantitative Verification

- QComp 2020 Competition Report,” in ISoLA (4), 2020.

- Christian Hensel, Sebastian Junges, Joost-Pieter Katoen, Tim Quatmann, and Matthias Volk, “The probabilistic model checker Storm,” Int. J. Softw. Tools Technol. Transf., no. 4, 2022.

- Ernst Moritz Hahn et al., “The 2019 Comparison of Tools for the Analysis of Quantitative Formal

Models - (QComp 2019 Competition Report),” in TACAS (3), 2019.

- Christian Dehnert, Sebastian Junges, Joost-Pieter Katoen, and Matthias Volk, “A Storm is Coming: A Modern Probabilistic Model Checker,” in CAV (2), 2017.